
One thing we can learn from the latest fatal Tesla accident: AI and machine learning products need very careful design. In this article, I will go through the seven basic AI UX principles products should follow.

With the arrival of AI products, we enter a new era where machines start to behave differently. They not only carry out our orders but also do things on their own. This will change how people react, how we behave, and what we expect from these products.

As designers, we aim to create useful, easy-to-understand products that bring clarity to this murky new world of machine learning. Most importantly, we want to use the power of AI to make people’s lives easier and more joyful.

So let’s see how good UX design can achieve this. Here are my seven principles of AI UX.

AI UX Principle #1

Differentiate AI content visually

In many cases, we use AI and machine learning to dig deep into data and generate new and useful content for ourselves. These can come in the form of movie recommendations on Netflix, translations in Google Translate, or sales predictions in CRM systems.

AI-generated content can prove extremely useful, but these recommendations and predictions are not always accurate. AI algorithms have their own flaws, especially when they don’t have enough data or feedback to learn from.

We should let people know when an algorithm has generated a piece of content, so they can decide for themselves whether to trust it. That is the first principle of AI UX.

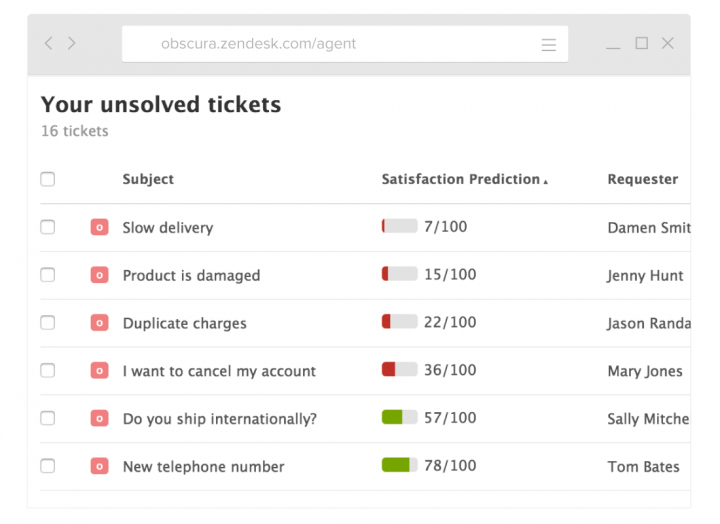

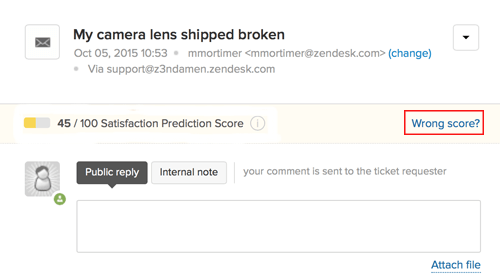

In the image above you can see Zendesk’s AI prediction for support tickets. It clearly identifies this as a prediction, so people know what to expect in that column.

In the image above you can see Zendesk’s AI prediction for support tickets. It clearly identifies this as a prediction, so people know what to expect in that column.

Firebase, a tool for mobile developers, labels predicted data with a magic wand icon. It also shows how accurate each prediction is, and users can set their own risk tolerance. Of course, this tool serves engineers who know more about machine learning; everyday people would not necessarily understand “High Risk Tolerance”. Still, the magic wand handily highlights AI content.
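To make the labeling idea concrete, here is a minimal sketch of how a product might carry an "AI-generated" flag and an optional confidence value alongside each piece of content, so the UI can always badge it. The names `ContentItem` and `render_label` and the badge wording are hypothetical illustrations, not Zendesk’s or Firebase’s actual APIs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContentItem:
    text: str
    ai_generated: bool = False          # hypothetical flag: did a model produce this?
    confidence: Optional[float] = None  # hypothetical model confidence, 0.0 to 1.0


def render_label(item: ContentItem) -> str:
    """Return the text a UI might display, badging AI content visibly."""
    if not item.ai_generated:
        return item.text  # human-entered content is shown unchanged
    badge = "[AI prediction]"
    if item.confidence is not None:
        badge += f" ({item.confidence:.0%} confident)"
    return f"{badge} {item.text}"


print(render_label(ContentItem("Satisfaction: Good", ai_generated=True, confidence=0.82)))
# → [AI prediction] (82% confident) Satisfaction: Good
```

Keeping the flag on the data itself, rather than deciding per screen, means every view that shows the content can label it consistently.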

AI UX Principle #2

Explain how machines think

Artificial intelligence often looks like magic: sometimes even engineers have difficulty explaining how a machine learning algorithm comes up with something. As a UX company, we see our job as helping people understand how machines work so they can use them better.

This doesn’t mean we should explain how a convolutional neural network functions in a simple photo search. But we should give users hints about what the algorithm does or what data it uses.

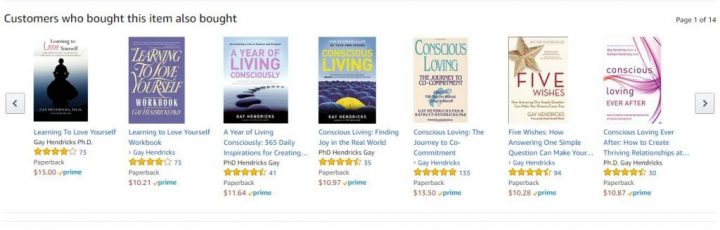

A classic example comes from e-commerce, where shops explain why they recommend certain products. For many people, these recommendation engines were their first encounter with AI UX, many years ago.

Self-driving cars provide another good example of AI UX. To build passengers’ trust, we suggest putting a screen in the car where everyone can see what the car perceives around it. Check out this example design from our self-driving lab project.

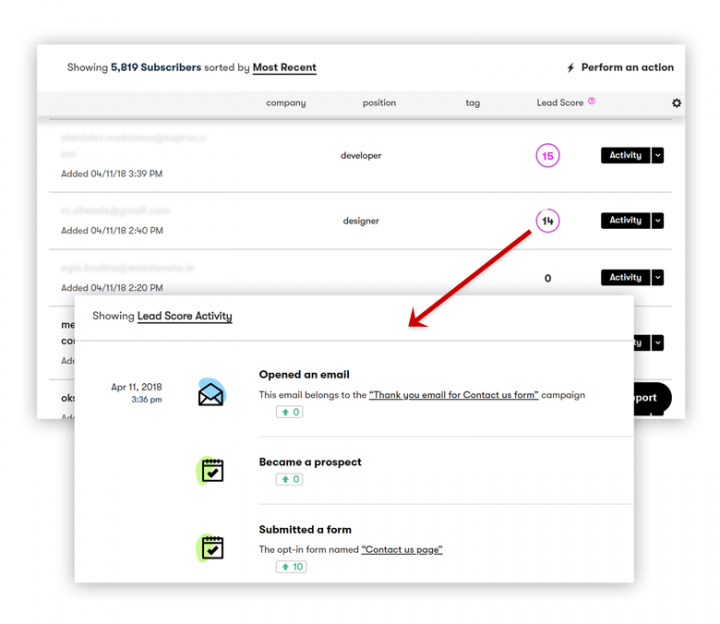

Last but not least, let’s take a look at Drip, an email marketing tool. Although not an AI product per se, it has a scoring function that shows newsletter subscriber engagement. Clicking on the score opens a detailed list of actions that explains why the subscriber got that score.
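The pattern behind such an explainable score can be sketched as follows: compute the score and keep the list of contributing reasons next to it, so the UI can reveal them on click. The action names and point values below are made-up assumptions, not Drip’s real scoring rules.

```python
from dataclasses import dataclass

# Hypothetical points per subscriber action (not Drip's actual rules).
ACTION_POINTS = {
    "opened_email": 1,
    "clicked_link": 3,
    "visited_site": 5,
}


@dataclass
class ScoreExplanation:
    total: int
    reasons: list[tuple[str, int]]  # (action, points it contributed)


def score_subscriber(actions: list[str]) -> ScoreExplanation:
    """Score a subscriber and record which actions produced the score."""
    reasons = []
    for action in actions:
        points = ACTION_POINTS.get(action, 0)  # unknown actions contribute nothing
        if points:
            reasons.append((action, points))
    return ScoreExplanation(total=sum(p for _, p in reasons), reasons=reasons)


explanation = score_subscriber(["opened_email", "clicked_link", "changed_address"])
print(explanation.total)  # → 4
for action, points in explanation.reasons:
    print(f"+{points}  {action}")  # the list a user would see after clicking the score
```

Storing the reasons together with the total, instead of recomputing them later, guarantees the explanation always matches the number shown.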

AI UX Principle #3

Set the right expectations

In the deadly Tesla accident mentioned above, the driver probably trusted the Autopilot system too much.

Unlike other self-driving technologies such as Google’s cars, Tesla’s Autopilot is not sophisticated enough to navigate complex situations, so drivers must keep their hands on the wheel even when using it. This driver didn’t follow these instructions, despite receiving multiple visual and audible warnings. He probably thought the car could handle the driving itself.

We must set the right expectations, especially in a world full of sensational, superficial news about new AI technologies.

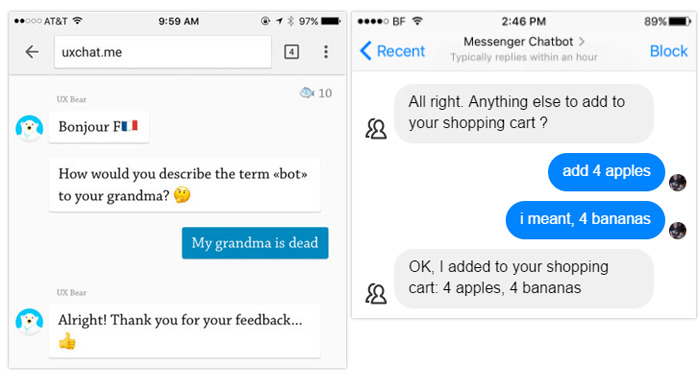

Some chatbots use messages to make clear how capable they are. In the example above, we try to lower expectations with friendly copy and a kind character for the bot.

AI UX Principle #4

Find and handle weird edge cases

AI can generate content and take actions no one had thought of before. For such unpredictable cases, we have to spend more time testing the products and finding weird, funny, or even disturbing or unpleasant edge cases.

Examples abound of funny and rude chatbots that didn’t understand the context, or that received simple but unexpected commands.

Stories about Amazon’s Alexa keep surfacing, too. Once, it ordered a dollhouse just because it heard a conversation about it on TV. In another example, a passport checker bot rejected an Asian man’s photo, claiming his eyes were closed.

Extensive field testing can help minimize these errors. Clear communication about the product’s capabilities can help people understand these unexpected situations.

Designers have to provide developers with information about user expectations, too. Developers can then fine-tune the algorithms to prevent bad responses. In many cases, this means balancing the trade-off between precision and recall.

Optimizing for recall means the machine learning product will return as many of the right answers as it can, even if a few wrong answers slip in. Let’s say we build an AI that can identify Picasso paintings. If we optimize for recall, the algorithm will list all the Picasso paintings, but some van Goghs will appear in the results too.

Optimizing for precision means the machine learning algorithm will return only the answers it is confident about, at the cost of missing some borderline cases. It will show only Picasso paintings (with no van Goghs), but it will miss some Picassos.
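The Picasso example can be checked with a few lines of arithmetic. This sketch assumes a detector that gives each painting a confidence score (the scores are invented for illustration) and shows how moving the decision threshold trades recall for precision. Precision is the share of returned paintings that really are Picassos; recall is the share of all Picassos that were returned.

```python
def precision_recall(predicted: set[str], actual: set[str]) -> tuple[float, float]:
    """Precision: share of returned items that are correct.
    Recall: share of correct items that were returned."""
    true_positives = len(predicted & actual)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(actual) if actual else 0.0
    return precision, recall


# Made-up confidence scores from a hypothetical Picasso detector.
scores = {
    "Guernica": 0.95,                   # Picasso
    "Les Demoiselles d'Avignon": 0.90,  # Picasso
    "The Old Guitarist": 0.60,          # Picasso
    "The Starry Night": 0.55,           # van Gogh
    "Sunflowers": 0.30,                 # van Gogh
}
picassos = {"Guernica", "Les Demoiselles d'Avignon", "The Old Guitarist"}


def select(threshold: float) -> set[str]:
    """Return every painting the model scores at or above the threshold."""
    return {name for name, score in scores.items() if score >= threshold}


for threshold in (0.5, 0.8):
    p, r = precision_recall(select(threshold), picassos)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
# → threshold=0.5: precision=0.75, recall=1.00   (all Picassos, plus one van Gogh)
# → threshold=0.8: precision=1.00, recall=0.67   (only Picassos, but one is missed)
```

A low threshold optimizes for recall and a high one for precision; deciding where to set it is exactly the kind of question designers can inform with insights about how users react to each kind of mistake.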

When we work on AI UX, we help developers decide what to optimize for. Providing meaningful insights about human reactions and priorities can be the most important job of a designer in an AI project.

AI UX Principle #5

Provide engineers with the right training data

Creating an AI product from the engineering side usually takes these three high-level steps:

- Finding the best AI algorithm for your task.

- Feeding the AI training data. The AI learns from this data and creates a model it will use in the live product. In our example above, the training data would include a lot of paintings and the name of the painter for each.

- Publishing the product. The product uses the trained model to perform tasks for its users. It might also collect new data for later use, re-training the model and improving its own performance.

So training data is essential. UX people help collect it and define the expected outcome people want to see from the AI product.

Sometimes defining expected outcomes is easy. Things get harder when the outcome is subjective: which Netflix film recommendations are really useful? The UX team aims to understand people and define these criteria.
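The three steps above can be illustrated with a deliberately tiny sketch. As a stand-in for "the best AI algorithm" it uses a nearest-centroid classifier, and each painting is reduced to two made-up numeric features; real painting classifiers work very differently. The point is only to show where labeled training data (inputs paired with expected outcomes) enters the process.

```python
from collections import defaultdict
from math import dist

# A labeled example: (made-up numeric features of a painting, expected painter).
Example = tuple[tuple[float, float], str]
Model = dict[str, tuple[float, float]]


def train(examples: list[Example]) -> Model:
    """Step 2: learn one average feature vector (centroid) per painter.
    (Step 1, choosing the algorithm, is the choice of nearest-centroid itself.)"""
    sums: dict[str, list[float]] = defaultdict(lambda: [0.0, 0.0])
    counts: dict[str, int] = defaultdict(int)
    for (x, y), painter in examples:
        sums[painter][0] += x
        sums[painter][1] += y
        counts[painter] += 1
    return {p: (s[0] / counts[p], s[1] / counts[p]) for p, s in sums.items()}


def predict(model: Model, features: tuple[float, float]) -> str:
    """Step 3: the live product picks the painter whose centroid is closest."""
    return min(model, key=lambda painter: dist(model[painter], features))


# Hypothetical training data, e.g. prepared with help from domain experts.
training_data: list[Example] = [
    ((0.2, 0.8), "Picasso"),
    ((0.3, 0.7), "Picasso"),
    ((0.9, 0.1), "van Gogh"),
    ((0.8, 0.2), "van Gogh"),
]
model = train(training_data)
print(predict(model, (0.25, 0.75)))  # → Picasso

# Later: new labeled data collected by the live product is added and the model retrained.
training_data.append(((0.7, 0.3), "van Gogh"))
model = train(training_data)
```

Notice that the code itself is short; the quality of the result depends almost entirely on how well the labeled examples reflect the outcomes people expect.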

Engineers will need training data, specifically well-defined outcomes for different inputs they can feed into the machine learning algorithm. Google reportedly hires “content specialists”, experts in the domain of the product who help build this training data set.

After collecting an initial data set, the engineers can train the algorithm and we can start doing user tests with early prototypes. With these tests, we double check the first trained models to see how they perform with real users.

In an AI project, you will need even closer collaboration between developers and designers.


AI UX Principle #6

User testing for AI products (default methods won’t work here)

Testing the UX of AI products can prove much more difficult than testing regular apps. These apps mainly promise personalized content, which you can hardly emulate with placeholder content in a wireframe. Two methods work well, though: Wizard of Oz testing and using participants’ personal content.

During Wizard of Oz studies, someone emulates the product’s responses from behind the scenes. This method is often used to test chatbots, with a human answering each message while pretending to be the bot.

You can also use the test participant’s personal content in test situations. Ask for their favorite musicians and songs, and use them when testing a music recommendation engine. This works very well for testing people’s assumptions and how they react to good and bad recommendations.

AI UX Principle #7

Provide an opportunity to give feedback

The user experience of AI products gets better and better as we feed more data into the machine learning algorithms.

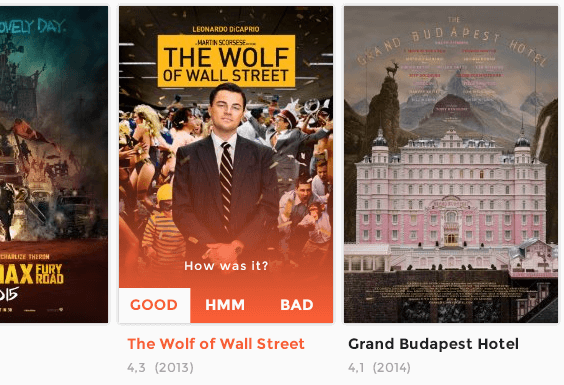

Look at the movie recommendation system UI we designed, shown above. For each movie displayed, you can indicate whether you like it or not. This collects vast amounts of training data for the algorithm.

You should also give your users the opportunity to give feedback about the AI content.

On every screen where the app has made a recommendation or prediction, let users give feedback easily and right away. This usually means one-tap feedback options displayed next to the AI content.

In Zendesk, a button next to each prediction lets users report bad ones.
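One way to turn one-tap feedback into new training data is sketched below: each tap is logged as an event, and the log is later converted into labeled examples (1 for "like", 0 for "dislike"). The `FeedbackLog` class and the rule that a user’s latest tap wins are illustrative assumptions, not a description of any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackLog:
    """Hypothetical store for one-tap feedback on AI-generated items."""
    events: list[tuple[str, str, bool]] = field(default_factory=list)  # (user_id, item_id, liked)

    def record(self, user_id: str, item_id: str, liked: bool) -> None:
        """Called when the user taps a thumbs-up or thumbs-down next to an item."""
        self.events.append((user_id, item_id, liked))

    def to_training_examples(self) -> list[tuple[tuple[str, str], int]]:
        """Convert feedback into labeled examples; a later tap overrides an earlier one."""
        latest: dict[tuple[str, str], bool] = {}
        for user_id, item_id, liked in self.events:
            latest[(user_id, item_id)] = liked
        return [(key, 1 if liked else 0) for key, liked in latest.items()]


log = FeedbackLog()
log.record("u1", "movie-42", liked=True)
log.record("u1", "movie-7", liked=False)
log.record("u1", "movie-42", liked=False)  # the user changed their mind
print(log.to_training_examples())
# → [(('u1', 'movie-42'), 0), (('u1', 'movie-7'), 0)]
```

Keeping the raw events, rather than only the latest state, also lets the team study how often people change their minds about recommendations.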

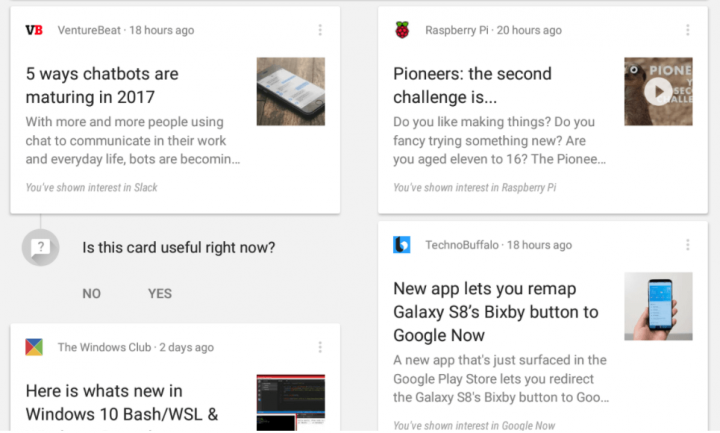

In Google’s feed, questions occasionally appeared below a card asking for feedback about its usefulness. Google also found a great way to communicate how the algorithm works: the feed displayed the phrase the user had shown interest in to explain why it recommended a certain article.

Summary: The seven principles of AI UX

To sum it up, the seven things to do while designing AI UX:

- Distinguish AI content from normal content visually so people will know where the information is coming from.

- Explain how machines think so people will understand the results.

- Set expectations so people will know what they can or can’t achieve with the AI product.

- Find and handle edge cases so no weird or unpleasant things happen to your users.

- Help engineers with insights about people’s expectations and the right training data.

- Test the AI UX with methods like Wizard of Oz testing. Use the test participant’s own data when the test requires emulating AI content.

- Provide the opportunity for users to give feedback and add new training data to the system.

Designing AI products poses an exciting new challenge. Keep these seven principles in mind and you will probably succeed.




(Cover image source: Aerolab)